Creating a set of resources for a storage

In the KUMA console, a storage service is created based on the set of resources for the storage.

To create a set of resources for a storage in the KUMA console:

- In the KUMA console, under Resources → Storages, click Add storage.

This opens the Create storage window.

- On the Basic settings tab, in the Storage name field, enter a unique name for the service you are creating. The name must contain 1 to 128 Unicode characters.

- In the Tenant drop-down list, select the tenant that will own the storage.

- In the Description field, optionally enter a description of the service of up to 256 Unicode characters.

- In the Retention period field, specify the period, in days from the moment of arrival, during which you want to store events in the ClickHouse cluster. When the specified period expires, events are automatically deleted from the ClickHouse cluster. If cold storage of events is configured, when the event storage period in the ClickHouse cluster expires, the data is moved to cold storage disks. If a cold storage disk is misconfigured, the data is deleted.

- In the Audit retention period field, specify the period, in days, to store audit events. The minimum and default value is 365.

- If cold storage is required, specify the event storage term:

- Cold retention period: the number of days to store events. The minimum value is 1.

- Audit cold retention period: the number of days to store audit events. The minimum value is 0.
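The limits listed for the Basic settings tab can be checked before the values are typed into the console. The following Python sketch is only an illustration: the `BasicSettings` class, its field names, and `check_basic_settings` are hypothetical and are not part of KUMA.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BasicSettings:
    # Hypothetical container; field names are illustrative, not KUMA identifiers.
    name: str
    description: str = ""
    audit_retention_days: int = 365                   # minimum and default: 365
    cold_retention_days: Optional[int] = None         # minimum 1 when set
    audit_cold_retention_days: Optional[int] = None   # minimum 0 when set


def check_basic_settings(s: BasicSettings) -> List[str]:
    """Return a list of violated limits (an empty list means no problems found)."""
    problems = []
    # len() counts Unicode code points, which matches "Unicode characters".
    if not 1 <= len(s.name) <= 128:
        problems.append("Storage name must contain 1 to 128 characters")
    if len(s.description) > 256:
        problems.append("Description must not exceed 256 characters")
    if s.audit_retention_days < 365:
        problems.append("Audit retention period must be at least 365 days")
    if s.cold_retention_days is not None and s.cold_retention_days < 1:
        problems.append("Cold retention period must be at least 1 day")
    if s.audit_cold_retention_days is not None and s.audit_cold_retention_days < 0:
        problems.append("Audit cold retention period must be at least 0 days")
    return problems


print(check_basic_settings(BasicSettings(name="main-storage")))
```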

- In the Debug drop-down list, specify whether resource logging must be enabled. The default value (Disabled) means that only errors are logged for all KUMA components. If you want to obtain detailed data in the logs, select Enabled.

- If you want to change ClickHouse settings, in the ClickHouse configuration override field, paste the lines with settings from the ClickHouse configuration XML file /opt/kaspersky/kuma/clickhouse/cfg/config.xml. Specifying the root elements <yandex>, </yandex> is not required. Settings passed in this field are used instead of the default settings.

Example:

<merge_tree>

<parts_to_delay_insert>600</parts_to_delay_insert>

<parts_to_throw_insert>1100</parts_to_throw_insert>

</merge_tree>
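Because the override is pasted without its root element, a quick well-formedness check before pasting can catch an unclosed tag. The sketch below uses only the Python standard library and wraps the fragment in a temporary `<yandex>` root for parsing. The helper names are hypothetical, and the check says nothing about whether ClickHouse accepts the settings themselves.

```python
import xml.etree.ElementTree as ET


def parse_override(fragment: str) -> ET.Element:
    """Wrap the fragment in a temporary root element and parse it."""
    return ET.fromstring(f"<yandex>{fragment}</yandex>")


def is_well_formed(fragment: str) -> bool:
    """Return True if the fragment parses as XML once wrapped in a root."""
    try:
        parse_override(fragment)
        return True
    except ET.ParseError:
        return False


fragment = """
<merge_tree>
    <parts_to_delay_insert>600</parts_to_delay_insert>
    <parts_to_throw_insert>1100</parts_to_throw_insert>
</merge_tree>
"""

root = parse_override(fragment)
# Collect the child settings of <merge_tree> as a tag -> text mapping.
settings = {child.tag: child.text for child in root.find("merge_tree")}
print(settings)
```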

- If necessary, in the Spaces section, add spaces to the storage to distribute the stored events.

There can be multiple spaces. You can add spaces by clicking the Add space button and remove them by clicking the Delete space button.

Available settings:

- In the Name field, specify a name for the space containing 1 to 128 Unicode characters.

- In the Retention period field, specify the number of days to store events in the ClickHouse cluster.

- If necessary, in the Cold retention period field, specify the number of days to store the events in the cold storage. The minimum value is 1.

- In the Filter section, you can specify conditions to identify events that will be put into this space. You can select an existing filter from the drop-down list or create a new filter.

After the service is created, you can view and delete spaces in the storage resource settings.

There is no need to create a separate space for audit events. Events of this type (Type=4) are automatically placed in a separate Audit space with a storage term of at least 365 days. This space cannot be edited or deleted from the KUMA console.

- If necessary, in the Disks for cold storage section, add to the storage the disks where you want to transfer events from the ClickHouse cluster for long-term storage.

There can be multiple disks. You can add disks by clicking the Add disk button and remove them by clicking the Delete disk button.

Available settings:

- In the Type drop-down list, select the type of the disk being connected:

- Local—for the disks mounted in the operating system as directories.

- HDFS—for the disks of the Hadoop Distributed File System.

- In the Name field, specify the disk name. The name must contain 1 to 128 Unicode characters.

- If you select the Local disk type, specify the absolute directory path of the mounted local disk in the Path field. The path must begin and end with a "/" character.

- If you select the HDFS disk type, specify the path to HDFS in the Host field. For example, hdfs://hdfs1:9000/clickhouse/.


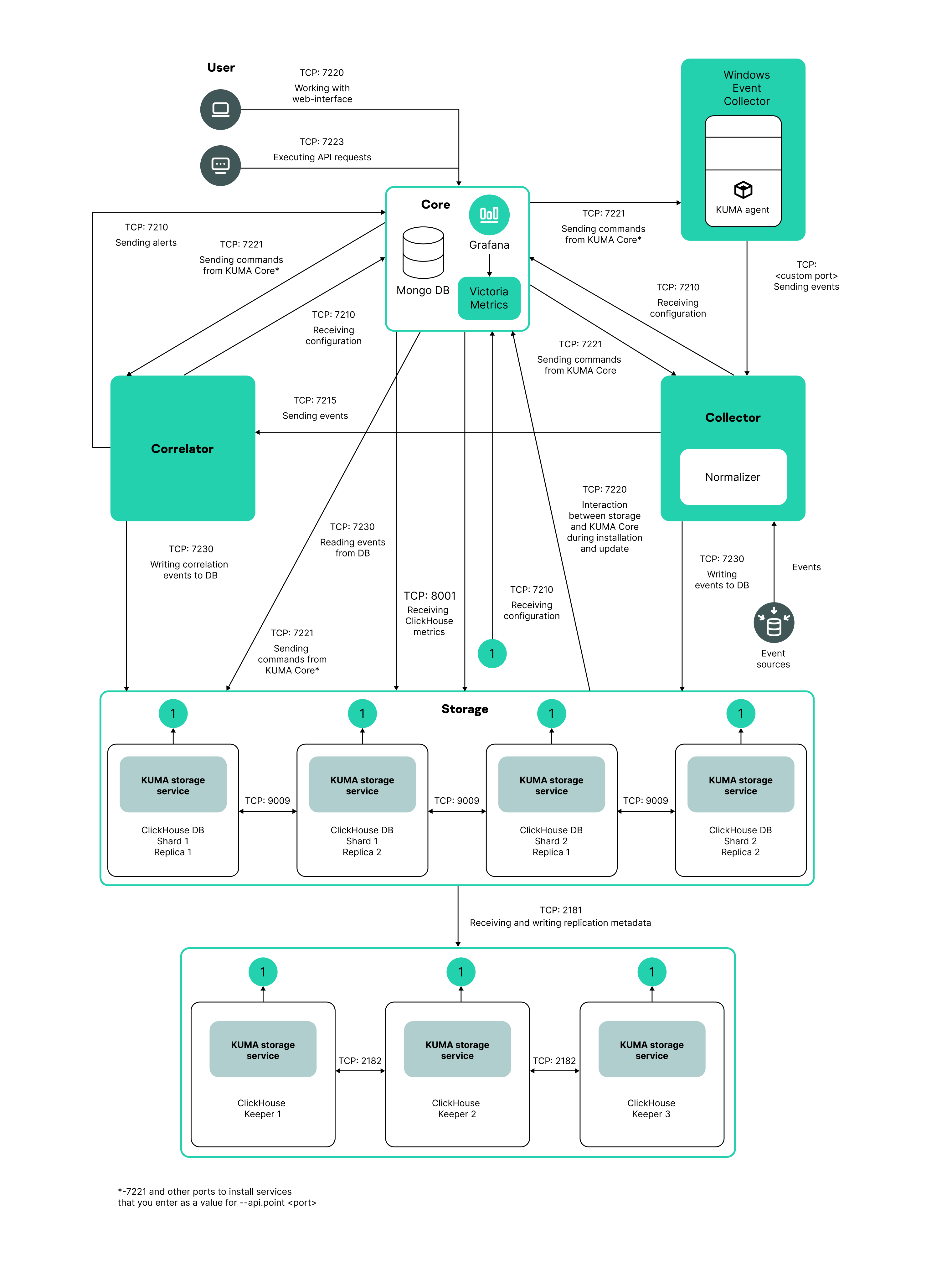
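The path rules for cold storage disks can be checked with a few lines of Python. This is a sketch under stated assumptions: the function names are hypothetical, and the HDFS check only requires an `hdfs://` scheme and a host name, which is inferred from the single example above and not from a documented format.

```python
from urllib.parse import urlparse


def check_local_path(path: str) -> bool:
    """A Local disk path must begin and end with a "/" character."""
    return path.startswith("/") and path.endswith("/")


def check_hdfs_host(value: str) -> bool:
    """Assumed rule: the value is a URL with the hdfs scheme and a host name."""
    parsed = urlparse(value)
    return parsed.scheme == "hdfs" and bool(parsed.hostname)


# /mnt/cold1/ is a made-up directory used only for illustration.
print(check_local_path("/mnt/cold1/"))
print(check_hdfs_host("hdfs://hdfs1:9000/clickhouse/"))
```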

- If necessary, in the ClickHouse cluster nodes section, add ClickHouse cluster nodes to the storage.

There can be multiple nodes. You can add nodes by clicking the Add node button and remove them by clicking the Remove node button.

Available settings:

- In the FQDN field, specify the fully qualified domain name of the node being added. For example, kuma-storage-cluster1-server1.example.com.

- In the shard, replica, and keeper ID fields, specify the role of the node in the ClickHouse cluster. The shard and keeper IDs must be unique within the cluster; the replica ID must be unique within the shard. The following example shows how to populate the ClickHouse cluster nodes section for a storage with dedicated keepers in a distributed installation. You can adapt the example to suit your needs.

Example:

ClickHouse cluster nodes

| FQDN | Shard ID | Replica ID | Keeper ID |
| --- | --- | --- | --- |
| kuma-storage-cluster1-server1.example.com | 0 | 0 | 1 |
| kuma-storage-cluster1-server2.example.com | 0 | 0 | 2 |
| kuma-storage-cluster1-server3.example.com | 0 | 0 | 3 |
| kuma-storage-cluster1-server4.example.com | 1 | 1 | 0 |
| kuma-storage-cluster1-server5.example.com | 1 | 2 | 0 |
| kuma-storage-cluster1-server6.example.com | 2 | 1 | 0 |
| kuma-storage-cluster1-server7.example.com | 2 | 2 | 0 |
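The uniqueness rules for node IDs can be checked before the values are entered in the console. The following Python sketch assumes that an ID of 0 means the node does not hold that role. This reading is inferred from the example above, where dedicated keepers have shard and replica IDs of 0 and storage nodes have a keeper ID of 0, and it is not a documented rule. The `Node` type and `check_nodes` function are hypothetical.

```python
from collections import Counter
from typing import List, NamedTuple


class Node(NamedTuple):
    fqdn: str
    shard: int
    replica: int
    keeper: int


def check_nodes(nodes: List[Node]) -> List[str]:
    """Return a list of ID conflicts; 0 is treated as "role not assigned"."""
    problems = []
    # Keeper IDs must not repeat anywhere in the cluster.
    keepers = Counter(n.keeper for n in nodes if n.keeper != 0)
    for keeper_id, count in keepers.items():
        if count > 1:
            problems.append(f"keeper ID {keeper_id} is used {count} times")
    # A replica ID must not repeat inside the same shard.
    pairs = Counter((n.shard, n.replica) for n in nodes if n.shard != 0)
    for (shard_id, replica_id), count in pairs.items():
        if count > 1:
            problems.append(f"replica ID {replica_id} is repeated in shard {shard_id}")
    return problems


# Values mirror the example: three dedicated keepers, two shards of two replicas.
ids = [(0, 0, 1), (0, 0, 2), (0, 0, 3), (1, 1, 0), (1, 2, 0), (2, 1, 0), (2, 2, 0)]
nodes = [
    Node(f"kuma-storage-cluster1-server{i}.example.com", shard, replica, keeper)
    for i, (shard, replica, keeper) in enumerate(ids, start=1)
]
print(check_nodes(nodes))
```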


- On the Advanced settings tab, in the Buffer size field, enter the buffer size in bytes at which events are sent to the database. The default value is 64 MB. No maximum value is configured. If the virtual machine has less free RAM than the specified Buffer size, KUMA sets the limit to 128 MB.

- On the Advanced settings tab, in the Buffer flush interval field, enter the time in seconds for which KUMA waits for the buffer to fill up. If the buffer is not full, but the specified time has passed, KUMA sends events to the database. The default value is 1 second.
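The interaction between Buffer size and Buffer flush interval can be pictured as a buffer that is flushed by whichever condition occurs first. The Python sketch below is an illustration of that idea only, not KUMA's implementation: the `EventBuffer` class is hypothetical, and it assumes that the interval is counted from the arrival of the first buffered event, which the text does not specify.

```python
from typing import Callable, List, Optional


class EventBuffer:
    def __init__(self, max_bytes: int, flush_interval: float,
                 send: Callable[[List[bytes]], None]) -> None:
        self.max_bytes = max_bytes
        self.flush_interval = flush_interval
        self.send = send
        self._events: List[bytes] = []
        self._size = 0
        self._started: Optional[float] = None  # arrival time of first buffered event

    def add(self, event: bytes, now: float) -> None:
        if not self._events:
            self._started = now
        self._events.append(event)
        self._size += len(event)
        if self._size >= self.max_bytes:   # size condition reached
            self.flush()

    def tick(self, now: float) -> None:
        # Time condition: the buffer is not full, but the interval has passed.
        if self._events and now - self._started >= self.flush_interval:
            self.flush()

    def flush(self) -> None:
        if self._events:
            self.send(self._events)
        self._events, self._size, self._started = [], 0, None


sent: List[List[bytes]] = []
buf = EventBuffer(max_bytes=10, flush_interval=1.0, send=sent.append)
buf.add(b"12345", now=0.0)
buf.tick(now=0.5)   # interval not reached yet, nothing is sent
buf.tick(now=1.0)   # interval reached, the partial buffer is sent
print(sent)
```

Passing the current time in explicitly keeps the sketch deterministic; a real service would read a clock instead.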

- On the Advanced settings tab, in the Disk buffer size limit field, enter the value in bytes. The disk buffer is used to temporarily store events that could not be sent for further processing or storage. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. The default value is 10 GB.
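The rotation rule, in which new events replace the oldest ones once the limit is exhausted, can be sketched with a size-bounded queue. The `RotatingBuffer` class below is hypothetical and keeps events in memory for simplicity. Dropping an event that is larger than the whole limit is an assumption of this sketch, not documented behavior.

```python
from collections import deque
from typing import Deque, List


class RotatingBuffer:
    def __init__(self, limit_bytes: int) -> None:
        self.limit_bytes = limit_bytes
        self._events: Deque[bytes] = deque()
        self._size = 0

    def put(self, event: bytes) -> None:
        if len(event) > self.limit_bytes:
            return  # assumption: an event that can never fit is dropped
        self._events.append(event)
        self._size += len(event)
        # Evict the oldest events until the buffer fits the limit again.
        while self._size > self.limit_bytes:
            oldest = self._events.popleft()
            self._size -= len(oldest)

    def contents(self) -> List[bytes]:
        return list(self._events)


disk_buf = RotatingBuffer(limit_bytes=10)
for event in (b"aaaa", b"bbbb", b"cccc"):
    disk_buf.put(event)
print(disk_buf.contents())  # the oldest event, b"aaaa", has been rotated out
```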

- On the Advanced settings tab, in the Disk buffer disabled drop-down list, select a value to enable or disable the use of the disk buffer. By default, the disk buffer is enabled.

- On the Advanced settings tab, in the Write to local database table drop-down list, select Enable or Disable. Writing is disabled by default.

In Enable mode, data is written only on the host where the storage is located. We recommend using this functionality only if you have configured balancing on the collector and/or correlator, that is, if at step 6 (Routing), in the Advanced settings section, the URL selection policy field is set to Round robin.

In Disable mode, data is distributed among the shards of the cluster.

The set of resources for the storage is created and is displayed under Resources → Storages. Now you can create a storage service.
