Contents

- File systems and network

- Contents of the VFS component

- Creating an IPC channel to VFS

- Building a VFS executable file

- Merging a client and VFS into one executable file

- Overview: arguments and environment variables of VFS

- Mounting a file system at startup

- Using VFS backends to separate file calls and network calls

- Writing a custom VFS backend

Contents of the VFS component

The VFS component contains a set of executable files, libraries and description files that let you use file systems and/or a network stack combined into a separate Virtual File System (VFS) process. If necessary, you can build your own VFS implementations.

VFS libraries

The vfs CMake package contains the following libraries:

- vfs_fs – contains the devfs, ramfs and romfs implementations, and lets you add implementations of other file systems to VFS.

- vfs_net – contains the devfs implementation and network stack.

- vfs_imp – contains the sum of the vfs_fs and vfs_net components.

- vfs_remote – client transport library that converts local calls into IPC requests to VFS and receives IPC responses.

- vfs_server – server transport library of VFS that receives IPC requests, converts them into local calls, and sends IPC responses.

- vfs_local – used for statically linking the client to VFS libraries.

VFS executable files

The precompiled_vfs CMake package contains the following executable files:

- VfsRamFs

- VfsSdCardFs

- VfsNet

The VfsRamFs and VfsSdCardFs executable files include the vfs_server, vfs_fs, vfat and lwext4 libraries. The VfsNet executable file includes the vfs_server, vfs_imp and dnet_imp libraries.

Each of these executable files has its own default values for arguments and environment variables.

If necessary, you can build your own VFS executable file that contains only the functionality you need.

VFS description files

The directory /opt/KasperskyOS-Community-Edition-<version>/sysroot-aarch64-kos/include/kl/ contains the following VFS files:

- VfsRamFs.edl, VfsSdCardFs.edl, VfsNet.edl and VfsEntity.edl, and the header files generated from them, including the transport code.

- Vfs.cdl and the generated Vfs.cdl.h.

- Vfs*.idl and the header files generated from them, including the transport code.

Creating an IPC channel to VFS

Let's examine a Client program using file systems and Berkeley sockets. To handle its calls, we start one VFS process (named VfsFsnet). Both network calls and file calls will be sent to this process. This approach is used when there is no need to separate file data streams from network data streams.

To ensure correct interaction between the Client and VfsFsnet processes, the name of the IPC channel between them must be defined by the _VFS_CONNECTION_ID macro declared in the vfs/defs.h file.

Below is a fragment of an init description for connecting the Client and VfsFsnet processes.

init.yaml

    - name: Client
      connections:
      - target: VfsFsnet
        id: {var: _VFS_CONNECTION_ID, include: vfs/defs.h}
    - name: VfsFsnet

Building a VFS executable file

When building a VFS executable file, you can include whatever specific functionality is required in this file, such as:

- Implementation of a specific file system

- Network stack

- Network driver

For example, you will need to build a "file version" and a "network version" of VFS to separate file calls from network calls. In some cases, you will need to include a network stack and file systems in the VFS ("full version" of VFS).

Building a "file version" of VFS

Let's examine a VFS program containing only an implementation of the lwext4 file system without a network stack. To build this executable file, the file containing the main() function must be linked to the vfs_server, vfs_fs and lwext4 libraries:

CMakeLists.txt

    project (vfsfs)

    include (platform/nk)

    # Set compile flags
    project_header_default ("STANDARD_GNU_11:YES" "STRICT_WARNINGS:NO")

    add_executable (VfsFs "src/vfs.c")

    # Linking with VFS libraries
    target_link_libraries (VfsFs
                           ${vfs_SERVER_LIB}
                           ${LWEXT4_LIB}
                           ${vfs_FS_LIB})

    # Prepare VFS to connect to the ramdisk driver process
    set_target_properties (VfsFs PROPERTIES ${blkdev_ENTITY}_REPLACEMENT ${ramdisk_ENTITY})

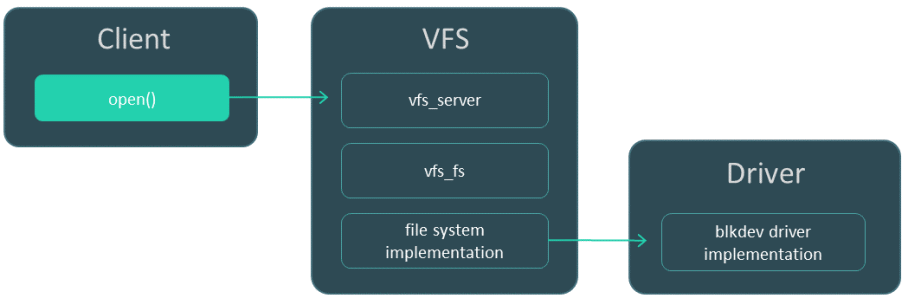

A block device driver cannot be linked to VFS and therefore must also be run as a separate process.

Interaction between three processes: client, "file version" of VFS, and block device driver.

Building a "network version" of VFS together with a network driver

Let's examine a VFS program containing a network stack with a driver but without implementations of file systems. To build this executable file, the file containing the main() function must be linked to the vfs_server, vfs_implementation and dnet_implementation libraries.

CMakeLists.txt

    project (vfsnet)

    include (platform/nk)

    # Set compile flags
    project_header_default ("STANDARD_GNU_11:YES" "STRICT_WARNINGS:NO")

    add_executable (VfsNet "src/vfs.c")

    # Linking with VFS libraries
    target_link_libraries (VfsNet
                           ${vfs_SERVER_LIB}
                           ${vfs_IMPLEMENTATION_LIB}
                           ${dnet_IMPLEMENTATION_LIB})

    # Disconnect the block device driver
    set_target_properties (VfsNet PROPERTIES ${blkdev_ENTITY}_REPLACEMENT "")

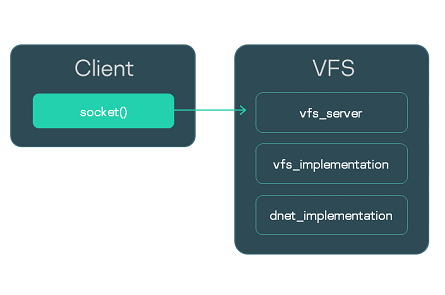

The dnet_implementation library already includes a network driver, therefore it is not necessary to start a separate driver process.

Interaction between the Client process and the process of the "network version" of VFS

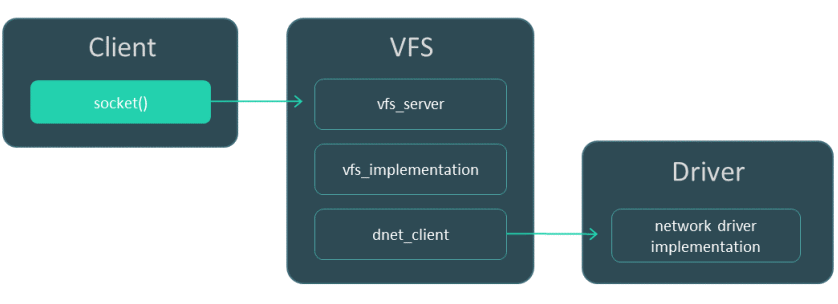

Building a "network version" of VFS with a separate network driver

Another option is to build the "network version" of VFS without a network driver. The network driver will need to be started as a separate process. Interaction with the driver occurs via IPC using the dnet_client library.

In this case, the file containing the main() function must be linked to the vfs_server, vfs_implementation and dnet_client libraries.

CMakeLists.txt

    project (vfsnet)

    include (platform/nk)

    # Set compile flags
    project_header_default ("STANDARD_GNU_11:YES" "STRICT_WARNINGS:NO")

    add_executable (VfsNet "src/vfs.c")

    # Linking with VFS libraries
    target_link_libraries (VfsNet
                           ${vfs_SERVER_LIB}
                           ${vfs_IMPLEMENTATION_LIB}
                           ${dnet_CLIENT_LIB})

    # Disconnect the block device driver
    set_target_properties (VfsNet PROPERTIES ${blkdev_ENTITY}_REPLACEMENT "")

Interaction between three processes: client, "network version" of VFS, and network driver.

Building a "full version" of VFS

If the VFS needs to include a network stack and implementations of file systems, the build should use the vfs_server library, vfs_implementation library, dnet_implementation library (or dnet_client library for a separate network driver), and the libraries for implementing file systems.

Merging a client and VFS into one executable file

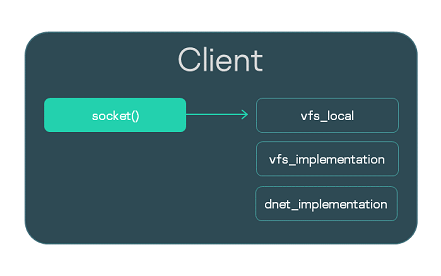

Let's examine a Client program using Berkeley sockets. Calls made by the Client must be sent to VFS. The normal path consists of starting a separate VFS process and creating an IPC channel. Alternatively, you can integrate VFS functionality directly into the Client executable file. To do so, when building the Client executable file, you need to link it to the vfs_local library that will receive calls, and link it to the implementation libraries vfs_implementation and dnet_implementation.

Local linking with VFS is convenient during debugging. In addition, network calls can be handled much faster because no IPC calls are involved. Nevertheless, isolating VFS in a separate process and interacting with it over IPC is always recommended as the more secure approach.
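The Client code itself is ordinary Berkeley socket code in both variants; only the linking changes. The following is a minimal sketch of such client code, assuming the usual POSIX socket headers are available in the SDK. The helper names make_ipv4_addr and connect_tcp are hypothetical and do not come from the VFS component.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: fill an IPv4 address structure.
 * Returns 0 on success, -1 if the text is not a valid IPv4 address. */
int make_ipv4_addr(const char *ip, unsigned short port, struct sockaddr_in *out)
{
    assert(ip != NULL && out != NULL);
    memset(out, 0, sizeof(*out));
    out->sin_family = AF_INET;
    out->sin_port = htons(port);
    return inet_pton(AF_INET, ip, &out->sin_addr) == 1 ? 0 : -1;
}

/* Hypothetical helper: open a TCP connection.
 * Returns the socket descriptor, or -1 on any failure. */
int connect_tcp(const char *ip, unsigned short port)
{
    struct sockaddr_in addr;

    if (make_ipv4_addr(ip, port, &addr) != 0)
        return -1;

    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;

    if (connect(fd, (struct sockaddr *)&addr, sizeof(addr)) != 0)
    {
        close(fd);
        return -1;
    }
    return fd;
}
```

Whether socket() and connect() are served by a separate VFS process or by libraries linked into the Client is decided at build time, so code of this kind should not need to change between the two variants.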

Below is a build script for the Client executable file.

CMakeLists.txt

    project (client)

    include (platform/nk)

    # Set compile flags
    project_header_default ("STANDARD_GNU_11:YES" "STRICT_WARNINGS:NO")

    # Generates the Client.edl.h file
    nk_build_edl_files (client_edl_files NK_MODULE "client" EDL "${CMAKE_SOURCE_DIR}/resources/edl/Client.edl")

    add_executable (Client "src/client.c")
    add_dependencies (Client client_edl_files)

    # Linking with VFS libraries
    target_link_libraries (Client ${vfs_LOCAL_LIB} ${vfs_IMPLEMENTATION_LIB} ${dnet_IMPLEMENTATION_LIB})

If the Client uses file systems, it must be linked not only to vfs_local but also to the vfs_fs library and to the implementation of the file system in use. You also need to add a block device driver to the solution.

Overview: arguments and environment variables of VFS

VFS arguments

- -l <entry in fstab format>
  The -l argument lets you mount the file system.

- -f <path to fstab file>
  The -f argument lets you pass the file containing entries in fstab format for mounting file systems. The ROMFS storage will be searched for the file. If the UNMAP_ROMFS variable is defined, the file system mounted using the ROOTFS variable will be searched for the file.

For examples of using the -l and -f arguments, see "Mounting a file system at startup".

VFS environment variables

- UNMAP_ROMFS
  If the UNMAP_ROMFS variable is defined, the ROMFS storage will be deleted. This helps conserve memory and changes the behavior of the -f argument.

- ROOTFS = <entry in fstab format>
  The ROOTFS variable lets you mount a file system to the root directory. In combination with the UNMAP_ROMFS variable and the -f argument, it lets you search for the fstab file in the mounted file system instead of in the ROMFS storage. For a ROOTFS usage example, see "Mounting with an external fstab".

- VFS_CLIENT_MAX_THREADS
  The VFS_CLIENT_MAX_THREADS environment variable lets you redefine the SDK configuration parameter VFS_CLIENT_MAX_THREADS during VFS startup.

- _VFS_NETWORK_BACKEND=<backend name>:<name of the IPC channel to VFS>
  The _VFS_NETWORK_BACKEND variable defines the backend used for network calls. You can specify the name of a standard backend (client, server or local) or the name of a custom backend. If the local backend is used, the name of the IPC channel is not specified (_VFS_NETWORK_BACKEND=local:). You can specify two or more IPC channels by separating them with a comma.

- _VFS_FILESYSTEM_BACKEND=<backend name>:<name of the IPC channel to VFS>
  The _VFS_FILESYSTEM_BACKEND variable defines the backend used for file calls. The backend name and the name of the IPC channel to VFS are defined in the same way as for the _VFS_NETWORK_BACKEND variable.
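To make the value format of the backend variables concrete, here is a minimal sketch that splits a value of the form <backend name>:<channel>[,<channel>...] into its parts. It is an illustration only, not the parser used by VFS. The names backend_spec, parse_backend_spec and MAX_CHANNELS, as well as the buffer sizes, are assumptions made for this example.

```c
#include <assert.h>
#include <string.h>

#define MAX_CHANNELS 4   /* hypothetical limit for this sketch */

/* Hypothetical holder for a parsed backend variable value. */
struct backend_spec
{
    char name[32];
    char channels[MAX_CHANNELS][32];
    int channel_count;
};

/* Parse "<backend>:<channel>[,<channel>...]".
 * Returns 0 on success, -1 on malformed input. */
int parse_backend_spec(const char *value, struct backend_spec *out)
{
    assert(value != NULL && out != NULL);
    memset(out, 0, sizeof(*out));

    const char *colon = strchr(value, ':');
    if (colon == NULL || colon == value)
        return -1;                        /* no separator or empty backend name */

    size_t name_len = (size_t)(colon - value);
    if (name_len >= sizeof(out->name))
        return -1;
    memcpy(out->name, value, name_len);   /* out is zeroed, so the name stays terminated */

    const char *p = colon + 1;
    while (*p != '\0')
    {
        size_t len = strcspn(p, ",");
        if (len == 0 || len >= sizeof(out->channels[0])
            || out->channel_count == MAX_CHANNELS)
            return -1;
        memcpy(out->channels[out->channel_count++], p, len);
        p += len;
        if (*p == ',')
        {
            p++;
            if (*p == '\0')
                return -1;                /* trailing comma */
        }
    }
    return 0;
}
```

With this sketch, the value client:VFS1,VFS2 yields the backend name client and two channels, while local: yields the backend name local and no channels, which matches the rule that the local backend takes no channel name.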

Default values

For the VfsRamFs executable file:

ROOTFS = ramdisk0,0 / ext4 0

VFS_FILESYSTEM_BACKEND = server:kl.VfsRamFs

For the VfsSdCardFs executable file:

ROOTFS = mmc0,0 / fat32 0

VFS_FILESYSTEM_BACKEND = server:kl.VfsSdCardFs

-l nodev /tmp ramfs 0

-l nodev /var ramfs 0

For the VfsNet executable file:

VFS_NETWORK_BACKEND = server:kl.VfsNet

VFS_FILESYSTEM_BACKEND = server:kl.VfsNet

-l devfs /dev devfs 0

Mounting a file system at startup

When the VFS process starts, only the RAMFS file system is mounted to the root directory by default. To mount other file systems, you can either call mount() after VFS starts or pass the necessary arguments and environment variables to the VFS process so that the file systems are mounted immediately at startup.

Let's examine three examples of mounting file systems at VFS startup. The Env program is used to pass arguments and environment variables to the VFS process.

Mounting with the -l argument

A simple way to mount a file system is to pass the -l <entry in fstab format> argument to the VFS process.

In this example, the devfs and romfs file systems will be mounted when the process named Vfs1 is started.

env.c

    #include <env/env.h>
    #include <stdlib.h>

    int main(int argc, char** argv)
    {
        const char* Vfs1Args[] = {
            "-l", "devfs /dev devfs 0",
            "-l", "romfs /etc romfs 0"
        };
        ENV_REGISTER_ARGS("Vfs1", Vfs1Args);

        envServerRun();

        return EXIT_SUCCESS;
    }
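Each entry passed with -l consists of four whitespace-separated fields, as in "devfs /dev devfs 0". The sketch below splits such an entry into its fields so you can validate entries before passing them to VFS. The structure and function names are hypothetical, and because this document does not describe the meaning of the fourth field, the sketch simply stores it as text.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical holder for one four-field entry such as "devfs /dev devfs 0". */
struct fstab_entry
{
    char source[64];       /* device or pseudo-device, e.g. "ramdisk0,0" */
    char mount_point[64];  /* e.g. "/" or "/dev" */
    char fs_type[32];      /* e.g. "ext4", "devfs" */
    char last_field[16];   /* fourth field, kept as text */
};

/* Returns 0 if the line has exactly four fields, -1 otherwise. */
int parse_fstab_entry(const char *line, struct fstab_entry *out)
{
    assert(line != NULL && out != NULL);

    char extra[2];  /* catches an unexpected fifth field */
    int n = sscanf(line, "%63s %63s %31s %15s %1s",
                   out->source, out->mount_point,
                   out->fs_type, out->last_field, extra);
    return (n == 4) ? 0 : -1;
}
```

The field widths in the format string are one less than the buffer sizes, so an overlong field cannot overflow a buffer; it is split instead, which usually makes the field count wrong and the entry is rejected.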

Mounting with fstab from ROMFS

If an fstab file is added when building a solution, the file will be available through the ROMFS storage after startup. It can be used for mounting by passing the -f <path to fstab file> argument to the VFS process.

In this example, the file systems defined via the fstab file that was added during the solution build will be mounted when the process named Vfs2 is started.

env.c

    #include <env/env.h>
    #include <stdlib.h>

    int main(int argc, char** argv)
    {
        const char* Vfs2Args[] = { "-f", "fstab" };
        ENV_REGISTER_ARGS("Vfs2", Vfs2Args);

        envServerRun();

        return EXIT_SUCCESS;
    }

Mounting with an external fstab

Let's assume that the fstab file is located on a drive and not in the ROMFS image of the solution. To use it for mounting, you need to pass the following arguments and environment variables to VFS:

- ROOTFS. This variable lets you mount the file system containing the fstab file into the root directory.

- UNMAP_ROMFS. If this variable is defined, the ROMFS storage is deleted. As a result, the fstab file will be searched for in the file system mounted using the ROOTFS variable.

- -f. This argument is used to define the path to the fstab file.

In the next example, the ext2 file system containing the /etc/fstab file used for mounting additional file systems will be mounted to the root directory when the process named Vfs3 starts. The ROMFS storage will be deleted.

env.c

    #include <env/env.h>
    #include <stdlib.h>

    int main(int argc, char** argv)
    {
        const char* Vfs3Args[] = { "-f", "/etc/fstab" };
        const char* Vfs3Envs[] = {
            "ROOTFS=ramdisk0,0 / ext2 0",
            "UNMAP_ROMFS=1"
        };
        ENV_REGISTER_PROGRAM_ENVIRONMENT("Vfs3", Vfs3Args, Vfs3Envs);

        envServerRun();

        return EXIT_SUCCESS;
    }

Using VFS backends to separate file calls and network calls

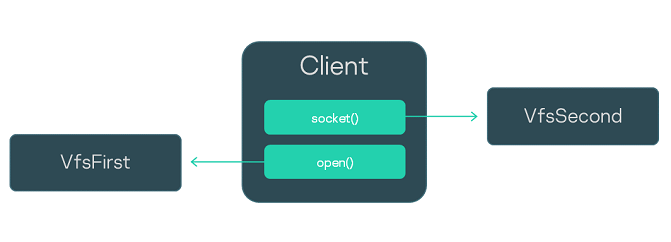

This example shows a secure development pattern that separates network data streams from file data streams.

Let's examine a Client program using file systems and Berkeley sockets. To handle its calls, we will start not one but two separate VFS processes from the VfsFirst and VfsSecond executable files. We will use environment variables to assign the file backends to work via the channel to VfsFirst and assign the network backends to work via the channel to VfsSecond. We will use the standard backends client and server. This way, we will redirect the file calls of the Client to VfsFirst and redirect the network calls to VfsSecond. To pass the environment variables to processes, we will add the Env program to the solution.

The init description of the solution is provided below. The Client process will be connected to the VfsFirst and VfsSecond processes, and each of the three processes will be connected to the Env process. Please note that the name of the IPC channel to the Env process is defined by using the ENV_SERVICE_NAME variable.

init.yaml

    entities:
    - name: Env
    - name: Client
      connections:
      - target: Env
        id: {var: ENV_SERVICE_NAME, include: env/env.h}
      - target: VfsFirst
        id: VFS1
      - target: VfsSecond
        id: VFS2
    - name: VfsFirst
      connections:
      - target: Env
        id: {var: ENV_SERVICE_NAME, include: env/env.h}
    - name: VfsSecond
      connections:
      - target: Env
        id: {var: ENV_SERVICE_NAME, include: env/env.h}

To send all file calls to VfsFirst, we define the value of the _VFS_FILESYSTEM_BACKEND environment variable as follows:

- For VfsFirst: _VFS_FILESYSTEM_BACKEND=server:<name of the IPC channel to VfsFirst>

- For Client: _VFS_FILESYSTEM_BACKEND=client:<name of the IPC channel to VfsFirst>

To send network calls to VfsSecond, we use the equivalent _VFS_NETWORK_BACKEND environment variable:

- For VfsSecond: _VFS_NETWORK_BACKEND=server:<name of the IPC channel to VfsSecond>

- For Client: _VFS_NETWORK_BACKEND=client:<name of the IPC channel to VfsSecond>

We define the value of environment variables through the Env program, which is presented below.

env.c

    #include <env/env.h>
    #include <stdlib.h>

    int main(void)
    {
        const char* vfs_first_envs[] = { "_VFS_FILESYSTEM_BACKEND=server:VFS1" };
        ENV_REGISTER_VARS("VfsFirst", vfs_first_envs);

        const char* vfs_second_envs[] = { "_VFS_NETWORK_BACKEND=server:VFS2" };
        ENV_REGISTER_VARS("VfsSecond", vfs_second_envs);

        const char* client_envs[] = { "_VFS_FILESYSTEM_BACKEND=client:VFS1", "_VFS_NETWORK_BACKEND=client:VFS2" };
        ENV_REGISTER_VARS("Client", client_envs);

        envServerRun();

        return EXIT_SUCCESS;
    }

Writing a custom VFS backend

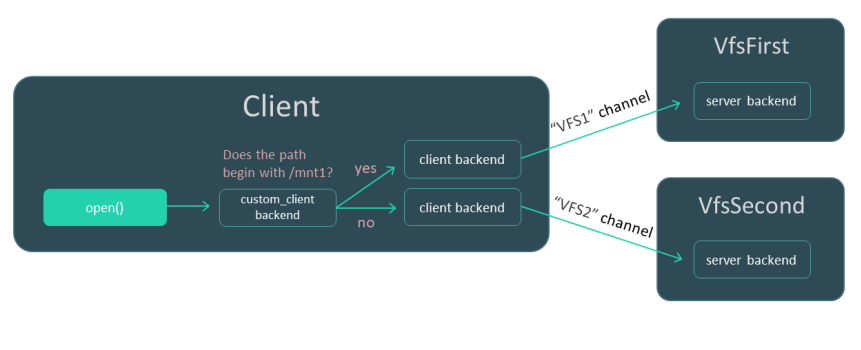

This example shows how to change the logic for handling file calls using a special VFS backend.

Let's examine a solution that includes the Client, VfsFirst and VfsSecond processes. Let's assume that the Client process is connected to VfsFirst and VfsSecond using IPC channels.

Our task is to ensure that queries from the Client process to the fat32 file system are handled by the VfsFirst process, and queries to the ext4 file system are handled by the VfsSecond process. To accomplish this task, we can use the VFS backend mechanism and will not even need to change the code of the Client program.

We will write a custom backend named custom_client, which will send calls via the VFS1 or VFS2 channel depending on whether or not the file path begins with /mnt1. To send calls, custom_client will use two instances of the standard client backend. In other words, it will act as a proxy backend.
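The backend.c listing later in this section matches any path that starts with the characters /mnt1, so a path such as /mnt10/file would also be routed to VFS1. If that is not wanted, the check can be tightened to respect the path component boundary. The following sketch shows one way to do this; path_is_under and channel_for_path are hypothetical helper names, and the stricter matching rule is an assumption about the desired behavior rather than part of the original example.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* True if path equals prefix or lies below it (prefix followed by '/').
 * The prefix is expected without a trailing slash, e.g. "/mnt1". */
bool path_is_under(const char *path, const char *prefix)
{
    assert(path != NULL && prefix != NULL);

    size_t n = strlen(prefix);
    if (strncmp(path, prefix, n) != 0)
        return false;

    /* The first n characters match, so path[n] is safe to read. */
    return path[n] == '\0' || path[n] == '/';
}

/* Hypothetical routing helper: name of the IPC channel to use for a path. */
const char *channel_for_path(const char *path)
{
    return path_is_under(path, "/mnt1") ? "VFS1" : "VFS2";
}
```

With this variant, /mnt1 and /mnt1/file.txt go to VFS1, while /mnt10/file.txt and /mnt2/data go to VFS2.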

We use the -l argument to mount fat32 to the /mnt1 directory for the VfsFirst process and mount ext4 to /mnt2 for the VfsSecond process. (It is assumed that VfsFirst contains a fat32 implementation and VfsSecond contains an ext4 implementation.) We use the _VFS_FILESYSTEM_BACKEND environment variable to define the backends (custom_client and server) and IPC channels (VFS1 and VFS2) to be used by the processes.

Then we use the init description to define the names of the IPC channels: VFS1 and VFS2.

This is examined in more detail below:

- Code of the custom_client backend.

- Linking of the Client program and the custom_client backend.

- Env program code.

- Init description.

Writing a custom_client backend

This file contains an implementation of the proxy custom backend that relays calls to one of the two standard client backends. The backend selection logic depends on the utilized path or on the file handle and is managed by additional data structures.

backend.c

    /* Code for managing file handles. */
    struct entry
    {
        Handle handle;
        bool is_vfat;
    };

    struct fd_array
    {
        struct entry entries[MAX_FDS];
        int pos;
        pthread_rwlock_t lock;
    };

    struct fd_array fds = { .pos = 0, .lock = PTHREAD_RWLOCK_INITIALIZER };

    int insert_entry(Handle fd, bool is_vfat)
    {
        pthread_rwlock_wrlock(&fds.lock);
        if (fds.pos == MAX_FDS)
        {
            pthread_rwlock_unlock(&fds.lock);
            return -1;
        }
        fds.entries[fds.pos].handle = fd;
        fds.entries[fds.pos].is_vfat = is_vfat;
        fds.pos++;
        pthread_rwlock_unlock(&fds.lock);
        return 0;
    }

    struct entry *find_entry(Handle fd)
    {
        pthread_rwlock_rdlock(&fds.lock);
        for (int i = 0; i < fds.pos; i++)
        {
            if (fds.entries[i].handle == fd)
            {
                pthread_rwlock_unlock(&fds.lock);
                return &fds.entries[i];
            }
        }
        pthread_rwlock_unlock(&fds.lock);
        return NULL;
    }

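    /* Custom backend structure. */
    struct context
    {
        struct vfs wrapper;
        pthread_rwlock_t lock;
        struct vfs *vfs_vfat;
        struct vfs *vfs_ext4;
    };

    /* Forward declarations of the backend methods defined below,
     * so that they can be referenced in the initializer of ctx. */
    static void _vfs_backend_dtor(struct vfs *vfs);
    static void _disconnect_all_clients(struct vfs *self, int *error);
    static Handle _getstdin(struct vfs *self, int *error);
    static Handle _getstdout(struct vfs *self, int *error);
    static Handle _getstderr(struct vfs *self, int *error);
    static Handle _open(struct vfs *self, const char *path, int oflag, mode_t mode, int *error);
    static ssize_t _read(struct vfs *self, Handle fd, void *buf, size_t count, bool *nodata, int *error);
    static ssize_t _write(struct vfs *self, Handle fd, const void *buf, size_t count, int *error);
    static int _close(struct vfs *self, Handle fd, int *error);

    struct context ctx =
    {
        .wrapper =
        {
            .dtor = _vfs_backend_dtor,
            .disconnect_all_clients = _disconnect_all_clients,
            .getstdin = _getstdin,
            .getstdout = _getstdout,
            .getstderr = _getstderr,
            .open = _open,
            .read = _read,
            .write = _write,
            .close = _close,
        }
    };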

    /* Implementation of custom backend methods. */
    static bool is_vfs_vfat_path(const char *path)
    {
        static const char vfat_path[] = "/mnt1";

        /* strncmp stops at the end of a shorter path, so it never reads past it. */
        return strncmp(path, vfat_path, sizeof(vfat_path) - 1) == 0;
    }

    static void _vfs_backend_dtor(struct vfs *vfs)
    {
        (void)vfs;
        ctx.vfs_vfat->dtor(ctx.vfs_vfat);
        ctx.vfs_ext4->dtor(ctx.vfs_ext4);
    }

    static void _disconnect_all_clients(struct vfs *self, int *error)
    {
        (void)self;
        ctx.vfs_vfat->disconnect_all_clients(ctx.vfs_vfat, error);
        ctx.vfs_ext4->disconnect_all_clients(ctx.vfs_ext4, error);
    }

    static Handle _getstdin(struct vfs *self, int *error)
    {
        (void)self;
        Handle handle = ctx.vfs_vfat->getstdin(ctx.vfs_vfat, error);
        if (handle != INVALID_HANDLE)
        {
            if (insert_entry(handle, true))
            {
                *error = ENOMEM;
                return INVALID_HANDLE;
            }
        }
        return handle;
    }

    static Handle _getstdout(struct vfs *self, int *error)
    {
        (void)self;
        Handle handle = ctx.vfs_vfat->getstdout(ctx.vfs_vfat, error);
        if (handle != INVALID_HANDLE)
        {
            if (insert_entry(handle, true))
            {
                *error = ENOMEM;
                return INVALID_HANDLE;
            }
        }
        return handle;
    }

    static Handle _getstderr(struct vfs *self, int *error)
    {
        (void)self;
        Handle handle = ctx.vfs_vfat->getstderr(ctx.vfs_vfat, error);
        if (handle != INVALID_HANDLE)
        {
            if (insert_entry(handle, true))
            {
                *error = ENOMEM;
                return INVALID_HANDLE;
            }
        }
        return handle;
    }

    static Handle _open(struct vfs *self, const char *path, int oflag, mode_t mode, int *error)
    {
        (void)self;
        Handle handle;
        bool is_vfat = false;

        if (is_vfs_vfat_path(path))
        {
            handle = ctx.vfs_vfat->open(ctx.vfs_vfat, path, oflag, mode, error);
            is_vfat = true;
        }
        else
            handle = ctx.vfs_ext4->open(ctx.vfs_ext4, path, oflag, mode, error);

        if (handle == INVALID_HANDLE)
            return INVALID_HANDLE;

        if (insert_entry(handle, is_vfat))
        {
            /* Roll back the open on whichever backend produced the handle. */
            if (is_vfat)
                ctx.vfs_vfat->close(ctx.vfs_vfat, handle, error);
            else
                ctx.vfs_ext4->close(ctx.vfs_ext4, handle, error);
            *error = ENOMEM;
            return INVALID_HANDLE;
        }

        return handle;
    }

    static ssize_t _read(struct vfs *self, Handle fd, void *buf, size_t count, bool *nodata, int *error)
    {
        (void)self;
        struct entry *found_entry = find_entry(fd);
        if (found_entry != NULL && found_entry->is_vfat)
            return ctx.vfs_vfat->read(ctx.vfs_vfat, fd, buf, count, nodata, error);
        return ctx.vfs_ext4->read(ctx.vfs_ext4, fd, buf, count, nodata, error);
    }

    static ssize_t _write(struct vfs *self, Handle fd, const void *buf, size_t count, int *error)
    {
        (void)self;
        struct entry *found_entry = find_entry(fd);
        if (found_entry != NULL && found_entry->is_vfat)
            return ctx.vfs_vfat->write(ctx.vfs_vfat, fd, buf, count, error);
        return ctx.vfs_ext4->write(ctx.vfs_ext4, fd, buf, count, error);
    }

    static int _close(struct vfs *self, Handle fd, int *error)
    {
        (void)self;
        struct entry *found_entry = find_entry(fd);
        if (found_entry != NULL && found_entry->is_vfat)
            return ctx.vfs_vfat->close(ctx.vfs_vfat, fd, error);
        return ctx.vfs_ext4->close(ctx.vfs_ext4, fd, error);
    }

    /* Custom backend builder. ctx.vfs_vfat and ctx.vfs_ext4 are initialized
     * as standard backends named "client". */
    static struct vfs *_vfs_backend_create(Handle client_id, const char *config, int *error)
    {
        (void)config;

        ctx.vfs_vfat = _vfs_init("client", client_id, "VFS1", error);
        assert(ctx.vfs_vfat != NULL && "Can't initialize client backend!");
        assert(ctx.vfs_vfat->dtor != NULL && "VFS FS backend has not set the destructor!");

        ctx.vfs_ext4 = _vfs_init("client", client_id, "VFS2", error);
        assert(ctx.vfs_ext4 != NULL && "Can't initialize client backend!");
        assert(ctx.vfs_ext4->dtor != NULL && "VFS FS backend has not set the destructor!");

        return &ctx.wrapper;
    }

    /* Registration of the custom backend under the name custom_client. */
    static void _vfs_backend(create_vfs_backend_t *ctor, const char **name)
    {
        *ctor = &_vfs_backend_create;
        *name = "custom_client";
    }

    REGISTER_VFS_BACKEND(_vfs_backend)
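Note that the handle table in backend.c only appends entries: _close forwards the call but does not remove the handle, so the table can fill up and a reused handle value could resolve to a stale entry. The following is a minimal sketch of a table that frees slots on close. It is a simplified stand-in rather than a drop-in replacement: it uses int instead of Handle, omits the read-write lock, and all names (handle_table, table_insert, table_lookup, table_remove, TABLE_SIZE) are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TABLE_SIZE 4   /* hypothetical capacity; backend.c uses MAX_FDS */

struct slot
{
    int handle;
    bool is_vfat;
    bool used;
};

struct handle_table
{
    struct slot slots[TABLE_SIZE];
};

/* Store a handle in the first free slot. Returns 0, or -1 if the table is full. */
int table_insert(struct handle_table *t, int handle, bool is_vfat)
{
    assert(t != NULL);
    for (size_t i = 0; i < TABLE_SIZE; i++)
    {
        if (!t->slots[i].used)
        {
            t->slots[i].handle = handle;
            t->slots[i].is_vfat = is_vfat;
            t->slots[i].used = true;
            return 0;
        }
    }
    return -1;
}

/* Returns 1 for a vfat handle, 0 for an ext4 handle, -1 if the handle is unknown. */
int table_lookup(const struct handle_table *t, int handle)
{
    assert(t != NULL);
    for (size_t i = 0; i < TABLE_SIZE; i++)
    {
        if (t->slots[i].used && t->slots[i].handle == handle)
            return t->slots[i].is_vfat ? 1 : 0;
    }
    return -1;
}

/* Free the slot of a closed handle so that it can be reused.
 * Returns 0, or -1 if the handle is not in the table. */
int table_remove(struct handle_table *t, int handle)
{
    assert(t != NULL);
    for (size_t i = 0; i < TABLE_SIZE; i++)
    {
        if (t->slots[i].used && t->slots[i].handle == handle)
        {
            t->slots[i].used = false;
            return 0;
        }
    }
    return -1;
}
```

In a real backend, the removal would be called from the close method after the underlying backend reports success, and the table would need the same locking as the original fd_array.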

Linking of the Client program and the custom_client backend

Compile the written backend into a library:

CMakeLists.txt

add_library (backend_client STATIC "src/backend.c")

Link the prepared backend_client library to the Client program:

CMakeLists.txt (fragment)

    add_dependencies (Client vfs_backend_client backend_client)

    target_link_libraries (Client
        pthread
        ${vfs_CLIENT_LIB}
        "-Wl,--whole-archive" backend_client "-Wl,--no-whole-archive" backend_client
    )

Writing the Env program

We use the Env program to pass arguments and environment variables to processes.

env.c

    #include <env/env.h>
    #include <stdlib.h>

    int main(int argc, char** argv)
    {
        /* Mount fat32 to /mnt1 for the VfsFirst process and mount ext4 to /mnt2 for the VfsSecond process. */
        const char* VfsFirstArgs[] = {
            "-l", "ahci0 /mnt1 fat32 0"
        };
        ENV_REGISTER_ARGS("VfsFirst", VfsFirstArgs);

        const char* VfsSecondArgs[] = {
            "-l", "ahci1 /mnt2 ext4 0"
        };
        ENV_REGISTER_ARGS("VfsSecond", VfsSecondArgs);

        /* Define the file backends. */
        const char* vfs_first_envs[] = { "_VFS_FILESYSTEM_BACKEND=server:VFS1" };
        ENV_REGISTER_VARS("VfsFirst", vfs_first_envs);

        const char* vfs_second_envs[] = { "_VFS_FILESYSTEM_BACKEND=server:VFS2" };
        ENV_REGISTER_VARS("VfsSecond", vfs_second_envs);

        const char* client_fs_envs[] = { "_VFS_FILESYSTEM_BACKEND=custom_client:VFS1,VFS2" };
        ENV_REGISTER_VARS("Client", client_fs_envs);

        envServerRun();

        return EXIT_SUCCESS;
    }

Editing init.yaml

For the IPC channels that connect the Client process to the VfsFirst and VfsSecond processes, you must define the same names that you specified in the _VFS_FILESYSTEM_BACKEND environment variable: VFS1 and VFS2.

init.yaml

    entities:
    - name: vfs_backend.Env
    - name: vfs_backend.Client
      connections:
      - target: vfs_backend.Env
        id: {var: ENV_SERVICE_NAME, include: env/env.h}
      - target: vfs_backend.VfsFirst
        id: VFS1
      - target: vfs_backend.VfsSecond
        id: VFS2
    - name: vfs_backend.VfsFirst
      connections:
      - target: vfs_backend.Env
        id: {var: ENV_SERVICE_NAME, include: env/env.h}
    - name: vfs_backend.VfsSecond
      connections:
      - target: vfs_backend.Env
        id: {var: ENV_SERVICE_NAME, include: env/env.h}